We’ve been building conversational AI agents for insurance companies for a few years now. We’ve done extensive manual testing for all of our customers. But until recently, we didn’t have an automated evaluation framework.

This post is about why we built one, what we learned, and why we think every team deploying conversational agents needs something like this. We’ll cover the concepts and our journey—a future post will dive deeper into the technical implementation.

The problem that forced our hand

We had a customer we worked with for about a year. They’d give us feedback in one direction, we’d implement it, and then they’d give us feedback in the opposite direction. The agent’s responses were too long. Then too short. More detail. Less detail.

The agent wasn’t the problem. The problem was that nobody—not us, not them, not even their own team—had defined what “correct” actually meant.

Different people on their team had different opinions. Some thought responses needed to be comprehensive. Others wanted brevity. Every time we fixed something one person cared about, we broke something someone else cared about. And because we were updating frequently, the agent kept changing, which made them uncomfortable. They couldn’t trust that it would behave consistently.

We were stuck in a loop that was never going to converge. We needed a way to define “correct” upfront, get alignment, and prove the agent was meeting the bar.

Why conversational agents are hard to evaluate

This particular customer’s agent—which we designed and built for them—provides guidance on medical and family issues for their users. People come looking for help with real problems. The stakes are high—regulatory compliance matters, customer trust matters, and the information needs to be accurate.

One thing I’ve noticed: we hold AI to a higher standard than humans. We accept that a human consultant might not know everything. But we expect the AI to be right 100% of the time.

And conversational agents face a uniquely difficult evaluation problem. Unlike a classification model where you can check if the output matches the expected label, there’s very little determinism. Someone could ask almost anything. The agent might need to pull from multiple knowledge bases, understand nuanced context, and know when to escalate versus when to answer directly.

How do you evaluate something with infinite possible inputs and context-dependent “correct” answers?

What inspired our approach

I was reading Anthropic’s blog posts about building evaluation frameworks, and it clicked. They talk about how having robust evaluations is critical—not just for quality control, but for enabling capability expansion. If you can measure how your agents perform, you can improve them systematically. If you can prove they meet a benchmark, you can promise that to customers.

We decided to build our own evaluation harness using LangSmith’s evaluation framework as the foundation. LangSmith handles the orchestration—running test cases, collecting results, managing experiments—which let us focus on the evaluation logic itself.

The core concept is straightforward:

Rubrics — A set of rules that define what “good” looks like for a specific agent. Rules like:

- Responses should use no more than three bullet points

- Answers should stay under 500 characters

- Emergency situations require immediate, concise guidance

- The agent should escalate to a human when it detects distress

Each rule has a severity level (high, medium, low) that we define with the customer. High-severity rules cover safety-critical behavior. Lower-severity rules cover preferences.
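To make that concrete, here is a minimal sketch of how a rubric like this could be represented in code. It is an illustration rather than our production schema: the class names, field names, rule IDs, and the severity given to each example rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass(frozen=True)
class Rule:
    rule_id: str        # hypothetical identifier, for illustration only
    description: str    # the rule text the judge is asked to apply
    severity: Severity  # agreed with the customer


@dataclass(frozen=True)
class Rubric:
    agent_name: str
    rules: tuple

    def with_severity(self, severity):
        """Return the rules at one severity level."""
        return [rule for rule in self.rules if rule.severity is severity]


# The severity assignments below are illustrative assumptions.
example_rubric = Rubric(
    agent_name="example-agent",
    rules=(
        Rule("max-bullets", "Responses should use no more than three bullet points", Severity.LOW),
        Rule("max-length", "Answers should stay under 500 characters", Severity.LOW),
        Rule("emergency", "Emergency situations require immediate, concise guidance", Severity.HIGH),
        Rule("escalate", "The agent should escalate to a human when it detects distress", Severity.HIGH),
    ),
)

high_severity_ids = [rule.rule_id for rule in example_rubric.with_severity(Severity.HIGH)]
```

Keeping severity on the rule itself means a report can lead with the safety-critical failures and list the preference-level ones afterwards.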

Question Sets — A comprehensive set of questions covering scenarios we expect users to ask. Also developed with the customer.

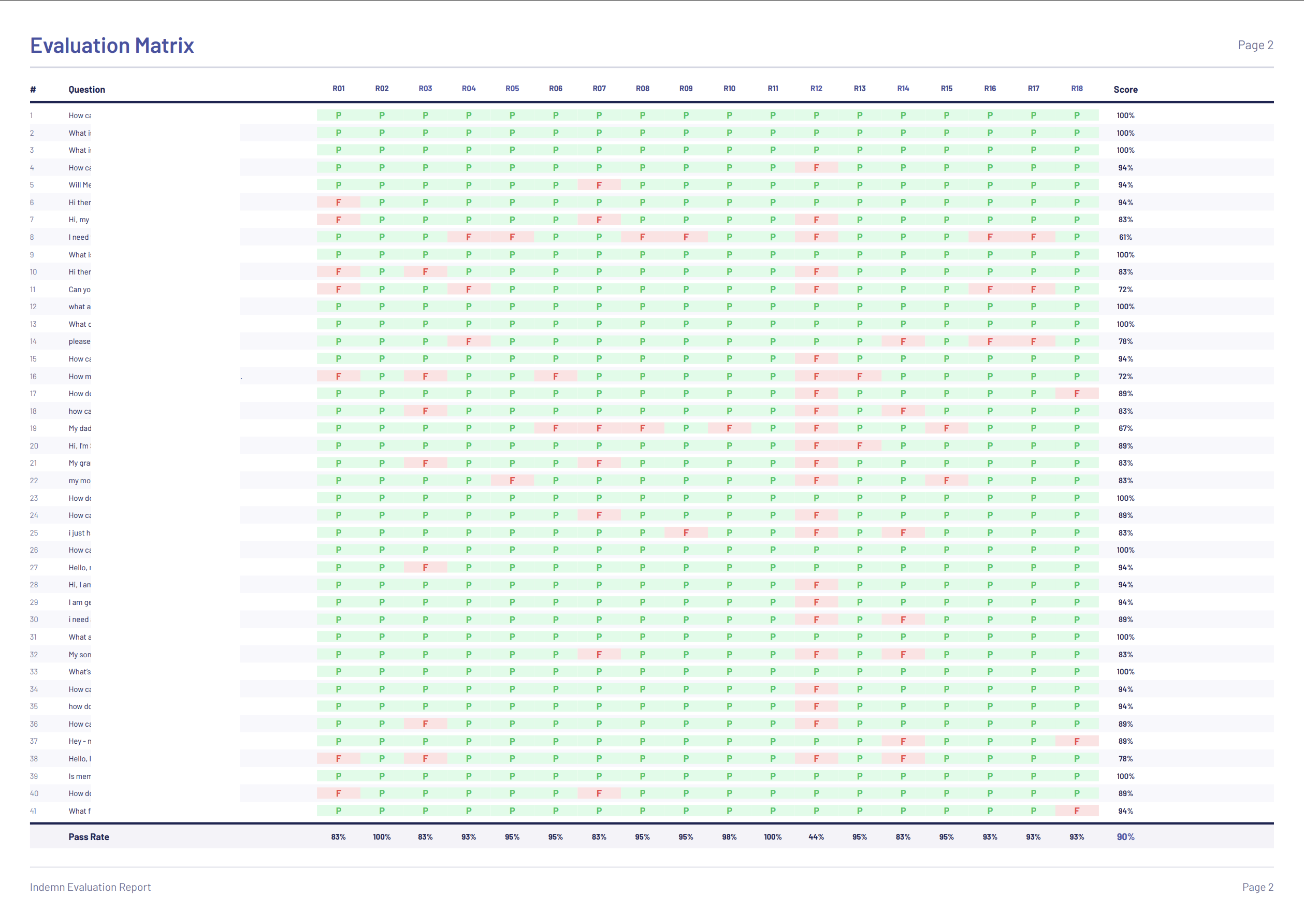

When we run an evaluation, every question goes to the agent. Every response gets scored against every rule by an LLM judge. The result is a question-by-rule matrix showing exactly where the agent passes and fails.
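The loop behind that matrix is simple enough to sketch. The version below is a simplified illustration and does not use LangSmith’s API: `run_evaluation`, `stub_agent`, and `stub_judge` are hypothetical names, and the stub judge uses plain string checks only so the example runs offline. In a real run the agent and the judge would both be model calls.

```python
from typing import Callable, List

Agent = Callable[[str], str]
Judge = Callable[[str, str, str], bool]  # (question, response, rule) -> passed?


def run_evaluation(questions: List[str], rules: List[str],
                   agent: Agent, judge: Judge) -> List[List[bool]]:
    """Return matrix[q][r]: did the response to question q pass rule r?"""
    matrix = []
    for question in questions:
        response = agent(question)  # one agent call per question
        row = [judge(question, response, rule) for rule in rules]  # one judge call per rule
        matrix.append(row)
    return matrix


# Stand-ins so the sketch runs offline (assumptions, not real components).
def stub_agent(question: str) -> str:
    return "- Stay calm\n- Call your doctor\n- Follow up tomorrow"


def stub_judge(question: str, response: str, rule: str) -> bool:
    if "500 characters" in rule:
        return len(response) < 500
    if "three bullet points" in rule:
        bullets = sum(line.startswith("- ") for line in response.splitlines())
        return bullets <= 3
    return False  # rules the stub cannot check are treated as failures


questions = ["What should I do about a fever?", "Who can I talk to about caring for a parent?"]
rules = [
    "Responses should use no more than three bullet points",
    "Answers should stay under 500 characters",
]
matrix = run_evaluation(questions, rules, stub_agent, stub_judge)
```

Each row is one question and each column is one rule, so a failing cell points at a specific response and the specific rule it broke.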

We built a React UI that displays this matrix, lets you drill down into individual results, and generates PDF reports for customers. Being able to visualize results clearly turned out to be just as important as generating them.

Why we use an LLM as the judge

You might wonder why we use an LLM to evaluate responses instead of deterministic checks. After all, we could write code to count bullet points or measure response length.

But the right answer often depends on context. If someone asks an emergency question about a family member’s health crisis, three concise bullet points with critical information might be exactly right. The same response to a casual question might feel too terse.

An LLM judge can evaluate: “Given this question and this context, did the response follow the rule appropriately?” It understands intent, not just patterns.
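Here is a rough sketch of what a single judge request could look like. The prompt wording, the PASS/FAIL reply format, and the function names are all assumptions for illustration, not the prompt we actually use. The model call itself is left out so the example stays self-contained.

```python
def build_judge_prompt(question: str, response: str, rule: str, context: str = "") -> str:
    """Assemble the text sent to the judge for one question-rule pair."""
    return (
        "You are evaluating a conversational agent's response against one rule.\n"
        f"Background on the agent: {context or 'none provided'}\n"
        f"Rule: {rule}\n"
        f"User question: {question}\n"
        f"Agent response: {response}\n"
        "Given this question and this context, did the response follow the rule "
        "appropriately? Answer PASS or FAIL on the first line, then give a "
        "one-sentence reason."
    )


def parse_verdict(judge_output: str) -> bool:
    """Read the first line of the judge's reply; anything other than PASS is a fail."""
    lines = judge_output.strip().splitlines()
    return bool(lines) and lines[0].strip().upper() == "PASS"


prompt = build_judge_prompt(
    question="My father collapsed, what do I do?",
    response="- Call emergency services now\n- Stay with him\n- Do not give food or water",
    rule="Emergency situations require immediate, concise guidance",
    context="The agent gives guidance on medical and family issues.",
)
```

Asking for a short reason alongside the verdict is what lets a failing cell in the matrix explain itself when you drill down into it.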

This does mean every evaluation run has a cost—you’re making LLM calls for each question-rule combination. For a rubric with 20 rules and a question set with 50 questions, that’s 1,000 judge calls per run. We use Claude Haiku for judging to keep costs reasonable while maintaining quality. It’s a tradeoff worth understanding if you’re building something similar.
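The arithmetic is worth writing down because it grows multiplicatively. In this small sketch the function names are hypothetical, and `cost_per_call` is a placeholder you would measure for your own judge model and prompts. We are not quoting a price here.

```python
def judge_calls_per_run(num_rules: int, num_questions: int) -> int:
    """Every response is scored against every rule, so calls multiply."""
    return num_rules * num_questions


def estimated_run_cost(num_rules: int, num_questions: int, cost_per_call: float) -> float:
    """cost_per_call is a placeholder value supplied by the caller."""
    return judge_calls_per_run(num_rules, num_questions) * cost_per_call


calls = judge_calls_per_run(num_rules=20, num_questions=50)  # 1000, matching the example above
```

With those numbers, adding one rule adds 50 judge calls per run, and adding one question adds 20 judge calls plus one agent call.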

The flexibility comes with another tradeoff—calibration. Our first run scored the agent at 30%. Not because the agent was bad, but because we hadn’t given the judge enough context. Too little context and it grades too harshly. Too much and it gets confused.

Finding the sweet spot took iteration. That same agent now scores at 91%.

What we learned along the way

Building the evaluation is itself iterative. You can’t design the perfect rubric upfront. You run it, see where the scores don’t match reality, and refine. The 30% → 91% journey wasn’t about improving the agent—it was about improving how we measure.
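One way to find where scores don’t match reality is to hand-label a small sample of question-rule pairs and compare those labels with the judge’s verdicts. The sketch below shows that idea under stated assumptions. It is not a description of our calibration process, and the function name, keys, and sample data are all hypothetical.

```python
def judge_agreement(judge_verdicts, human_verdicts):
    """Return (agreement_rate, disagreements) for verdicts keyed by (question_id, rule_id)."""
    shared = sorted(set(judge_verdicts) & set(human_verdicts))
    if not shared:
        raise ValueError("no overlapping (question, rule) pairs to compare")
    disagreements = [key for key in shared if judge_verdicts[key] != human_verdicts[key]]
    agreement_rate = 1 - len(disagreements) / len(shared)
    return agreement_rate, disagreements


# Hypothetical sample: the judge is harsher than the human reviewer on two of four cells.
judge_verdicts = {
    ("q1", "max-length"): True, ("q1", "escalate"): False,
    ("q2", "max-length"): False, ("q2", "escalate"): True,
}
human_verdicts = {
    ("q1", "max-length"): True, ("q1", "escalate"): True,
    ("q2", "max-length"): True, ("q2", "escalate"): True,
}

rate, disagreements = judge_agreement(judge_verdicts, human_verdicts)
```

The list of disagreements is the useful part: each entry is a cell where either the judge needs more context or the rule needs rewording.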

Every agent is different. We tried to build something universal at first. It didn’t work. Customer-specific context matters too much. We had to scope down and accept that customization is the point, not a limitation. Our platform now stores rubrics and question sets per agent, with versioning so we can track changes over time.

Some rules conflict. We found rules that were impossible to satisfy simultaneously. “Be comprehensive” and “be brief” can fight each other. Part of the value is surfacing those conflicts and making explicit tradeoffs with the customer.
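A result matrix can hint at these conflicts. One possible heuristic, sketched below, flags pairs of rules that each pass somewhere but never pass on the same response. This is an assumption about how you might surface candidates, not the method we used, and a flagged pair still needs a human to decide whether the rules truly conflict. The rule names and matrix are made up for the example.

```python
from itertools import combinations


def never_pass_together(matrix, rule_names):
    """Return rule pairs where each rule passes somewhere but no response passes both."""
    suspects = []
    for a, b in combinations(range(len(rule_names)), 2):
        a_passes = any(row[a] for row in matrix)
        b_passes = any(row[b] for row in matrix)
        both_pass = any(row[a] and row[b] for row in matrix)
        if a_passes and b_passes and not both_pass:
            suspects.append((rule_names[a], rule_names[b]))
    return suspects


# Hypothetical results: rows are questions, columns are rules.
rule_names = ["be comprehensive", "be brief", "escalate on distress"]
matrix = [
    [True, False, True],
    [False, True, True],
    [True, False, True],
]
suspects = never_pass_together(matrix, rule_names)
```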

The goal is trust, not perfection. 91% isn’t 100%. But now we can show exactly which 9% fails and why. That transparency changed everything.
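Both the headline number and the list of failures can come from the same matrix. In the sketch below the score is assumed to be the fraction of passing question-rule cells; a real scoring scheme might weight rules by severity instead. The function names and the sample matrix are hypothetical.

```python
def pass_rate(matrix):
    """Fraction of (question, rule) cells that passed."""
    cells = [cell for row in matrix for cell in row]
    return sum(cells) / len(cells)


def failures_by_rule(matrix):
    """Map 1-based rule number -> list of 1-based question numbers that failed it."""
    failures = {}
    for q_number, row in enumerate(matrix, start=1):
        for r_number, passed in enumerate(row, start=1):
            if not passed:
                failures.setdefault(r_number, []).append(q_number)
    return failures


# Hypothetical 4-question x 3-rule result matrix.
matrix = [
    [True, True, True],
    [True, False, True],
    [True, True, True],
    [True, False, False],
]
score = pass_rate(matrix)          # 9 of 12 cells pass
report = failures_by_rule(matrix)  # rule 2 fails on questions 2 and 4; rule 3 fails on question 4
```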

How it changed the conversation

Before this, we were having the same conversation over and over with that customer. “The agent isn’t right.” “We fixed it.” “Now something else is wrong.”

After building the evaluation framework, we have something to ground the discussion. We can show the matrix. We can point to specific rules and specific questions. We can measure progress.

Honestly, we were in a do-or-die situation—either we established trust or the relationship was over. The evaluation framework gave us a shared language. Instead of opinions, we have data. Instead of “it feels wrong,” we have “rule 7 is failing on questions 12, 23, and 41.”

We’re still working with them to improve, but the dynamic is completely different now.

Where we’re taking this

We’re rolling out rubric-based evaluations to all Indemn customers. Every agent we deploy will have:

- A clear rubric defining success criteria

- A question set covering expected scenarios

- Regular evaluation runs proving performance

- Visibility for both us and the customer through our platform

We’re also building RAG evaluation (testing knowledge base retrieval accuracy) and tool-calling evaluation (making sure agents use their tools correctly and at the right time).

The thing I’ve realized: your evaluation framework drives the capabilities you can provide. If you can measure performance on existing functionality, and build evaluations for new functionality before you ship, you have a benchmark for what’s possible.

If you have a benchmark, you can build almost anything.

If you’re working on conversational AI and thinking about evaluations, we’d love to hear from you. Reach out, or follow along as we build. A deeper technical dive into how we built this system is on the way.